Over the past few years in teaching and consulting with testers and test managers worldwide, I have noticed something interesting. On one hand, testers complain they hardly ever get user requirements adequate for testing. On the other hand, when discussing what to base tests upon, the main response is "user requirements."

When I suggest there are other ways to design and evaluate tests, the response is similar to the time I accidentally walked into the glass of the revolving door at the Portland airport: surprise, followed by intense laughter.

I'm serious, though. There really are other ways to design and evaluate tests besides requirements. First, let's look at why you may need to do this.

By the way, let me be quick to say that I am a big believer in having solid, testable requirements for any project. Without them, you are at risk for a multitude of problems, including not knowing if an acceptable solution has been delivered. However, I also know we must anticipate real-world situations in which we may not (or should I say "probably not") get testable requirements.

Here are just a few of the situations that may require techniques other than requirements-based testing.

"We don't do requirements well" - The title of this article is intentional. The word "defined" explicitly indicates that requirements are in a documented form that can be discussed, reviewed, managed and all the other things you do with things that are written - including filing them away and forgetting them. Hopefully, we'll not do the filing away and forgetting part.

Failing to create and manage requirements well is a cultural and a process problem. No matter how important testers consider requirements to be, unless the project team and customers also see them as important, you're not going to get them, or at least not to the degree you need them. So, even though you may rant and rave, at the end of the day you still don't have testable requirements.

Commercial Off-the-Shelf Software - Let's take the example of buying a commercial software product. You should have defined requirements, but not for the purpose of building the software. Your concerns relate to fitness for use. At the least, your requirements will be a list of things the software should do for you. Hopefully, the requirements will also define desired or needed attributes such as performance and usability. However, these requirements will not describe the processing rules of the software or of your organization.

Your task then becomes to design tests that validate that the software or system will do what you need it to do, in the way you need it done.

Stale Requirements - Perhaps at one time in the past you had a set of current and correct requirements. However, the passing of time was not kind to them. Business has changed, laws and technology have changed and now those requirements are not even close to how the system or your organization looks today.

Lack of User or Customer Input - The project team, including testers, may want testable requirements. However, the users and customers simply will not provide them. Perhaps they can't reach agreement on what is desired. Or, they will not give it their time - "We have a business to run here. You do your job and we'll do ours." Sure, they'll tell you when you've missed the mark - they just have a problem telling you where the mark is. That's very frustrating, indeed.

People Think They are Writing Good Requirements - To some people, requirements are sufficient even though they contain vague words and omit important details. These people seldom review these kinds of requirements and, in reality, little can be done with them. They are created as a step in a process, so that people can say they have defined requirements for the purpose of compliance.

Incorrect Requirements - Remember the pie charts and research data that show that most of the defects on a project originate in requirements? The problem is that even in well-defined requirements there are still errors.

Requirements Convey "What" not "How" - This is the correct nature of requirements. They should not convey how to implement a feature in the software. In addition, they should not convey how a user performs a task - that's what a use case does. So, when you design requirement-based tests you are focusing on the processing rules, not on the sequence of performing those rules.

This means that, in any event, you will need more information than the requirements contain to design a complete test.

Testing Using a Lifecycle Approach - In a lifecycle testing approach, items are reviewed and tested as they are created. In addition, the tests and their evaluation criteria are based on previously defined items such as concept of operations, requirements, use cases and design (both general and detailed).

For example, when testing code at a unit level, you can base some tests on requirements, but you will also need to use the detailed design for things such as interface testing, integration testing and detailed functional testing. You will also need to know the coding constructs for structural testing.

Other Methods of Test Design and Evaluation

Taking our eyes off requirements for a moment can reveal some interesting things. Here are some other places we can look for test sources.

User Scenarios

This is probably my favorite source of non-requirement based tests and is very well suited for user acceptance testing. You may be thinking, "Isn't this a good application for use cases?" You would be correct, except you may not have adequately defined use cases.

User scenarios are based on business or operational processes and how they are performed. Of course, this requires that you or someone else in the organization understand those processes. Even better, it would be great if those processes could be in a documented form someplace.

It is amazing that when the entire functional process for a task is defined, the individual paths or scenarios can become very apparent. Each of these paths or scenarios can be the basis for a test script. If test scripts don't appeal to you (and that's okay), then you can think of a series of test cases or conditions that will accomplish the testing of the process based on user scenarios.

The big question is where to find the knowledge of the processes. There are several possible sources of process information in most organizations:

User knowledge - You will have to interview them and work with them to map the process. It helps to speak with multiple users to get the full picture.

Training materials - Sometimes you will find process flow charts, etc. in training documents.

Business process re-engineering documentation - At some time in the past there may have been an effort to re-engineer processes. This is one deliverable from that type of effort.

System documentation - Yes, you may find documented processes there as well. Just make sure it is still current.

It takes a little practice to get good at mapping out the major process. I prefer to use flowcharting as the technique, since there are so many good flowcharting tools on the market. One of my favorite tools is SmartDraw (www.smartdraw.com). If you visit their web site, you will see some examples of defining processes with flowcharts.

One important thing to remember is to keep the level of detail general enough to manage the complexity. My guideline is to limit the major decisions shown in the chart to about five or six. Even this low number of decisions can yield twenty-five or more scenarios.
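To see why a handful of decisions produces so many scenarios, consider that five independent yes/no decisions allow up to 2^5 = 32 paths; real flows usually have somewhat fewer because some branches end early. The following is a minimal sketch, assuming the process has been captured as a simple map from each step to the steps that can follow it. The step names are hypothetical and are not taken from any particular flowchart.

```python
from typing import Dict, List

# Hypothetical process flow: each step maps to the steps that can follow it.
# A step with more than one successor is a decision point.
FLOW: Dict[str, List[str]] = {
    "start": ["payment_received", "no_payment"],
    "payment_received": ["on_time", "late"],
    "on_time": ["end"],
    "late": ["grace_period", "past_grace"],
    "grace_period": ["end"],
    "past_grace": ["apply_penalty", "waive_penalty"],
    "apply_penalty": ["end"],
    "waive_penalty": ["end"],
    "no_payment": ["send_notice"],
    "send_notice": ["end"],
    "end": [],
}


def enumerate_scenarios(flow, node="start", path=None):
    """Return every path from `node` to a step with no successors."""
    path = (path or []) + [node]
    successors = flow[node]
    if not successors:
        return [path]
    scenarios = []
    for next_step in successors:
        scenarios.extend(enumerate_scenarios(flow, next_step, path))
    return scenarios


scenarios = enumerate_scenarios(FLOW)
for number, steps in enumerate(scenarios, start=1):
    print(number, " -> ".join(steps))
```

Each printed path is a candidate test script. This sketch assumes the flow has no loops; a process that can cycle back to an earlier step would need a limit on how many times a step may repeat.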

I do not advocate the need to test all scenarios unless the risk is high. You will need to prioritize and choose the scenarios that are the most likely and most critical.
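One simple way to make that choice is to score each scenario for likelihood and criticality and test the highest scores first. This is only a sketch: the 1-to-5 scales, the multiplication used as a risk score, and the scenario names are all assumptions made for illustration, and your organization may weigh risk differently.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Scenario:
    name: str
    likelihood: int   # assumed scale: 1 (rare) to 5 (very common)
    criticality: int  # assumed scale: 1 (minor) to 5 (severe impact)

    @property
    def risk(self) -> int:
        # Assumed scoring rule: likelihood multiplied by criticality.
        return self.likelihood * self.criticality


def prioritize(scenarios: List[Scenario], limit: int) -> List[Scenario]:
    """Return the `limit` highest-risk scenarios, highest first."""
    return sorted(scenarios, key=lambda s: s.risk, reverse=True)[:limit]


# Hypothetical scenarios and scores.
candidates = [
    Scenario("payment on time", likelihood=5, criticality=2),
    Scenario("late, penalty applied", likelihood=3, criticality=5),
    Scenario("late, penalty waived", likelihood=1, criticality=4),
    Scenario("no payment, notice sent", likelihood=2, criticality=3),
]

selected = prioritize(candidates, limit=2)
for scenario in selected:
    print(scenario.risk, scenario.name)
```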

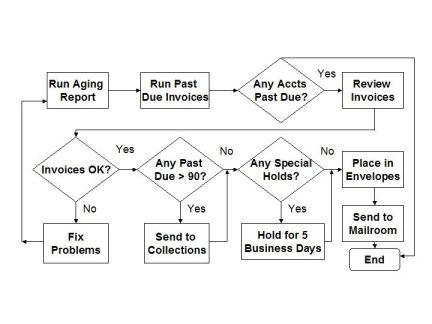

Here is how a typical process flow might look.

Figure 1 - Applying a Late Payment Penalty

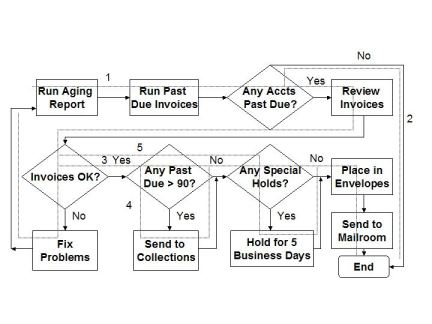

If we identify some of the scenarios paths, we can see:

Figure 2 - Distinct Scenarios in Applying a Late Payment Penalty

Usage Patterns

Usage patterns are just ways that people naturally use a system or software. For example, some people are partial to using a mouse for as many actions as possible. Other people use the mouse and keystrokes. Some people make maximum use of shortcuts, while others ask, "What's a control key?"

The problem with this source of tests is that if you try to test all the software functions in all the usage patterns, you won't have time to finish the test. For this reason, I usually recommend this type of testing be done in usability testing.

Generic Test Sources

There are at least fourteen sources of tests:

1. Field and data edits - Are the field edits and attributes correct?

2. Record handling - Is information processed correctly from point to point in the application without data loss?

3. File processing - Are files accessed and processed correctly?

4. Field and data relationships - When two or more data items are related, are the relationships correctly processed?

5. Matching and merging of data - When two or more data sources are involved, are the data merged together correctly?

6. Security - Are the data protected from malicious access, both internal and external to the organization?

7. Performance - Does the application have sufficient performance to meet user needs in terms of response times, throughput, handling of load, etc.? This can also include stress testing to simulate the conditions that cause the application to fail due to excessive load.

8. Control flows (processes and code) - Do the various control flows in the code perform functions as intended?

9. Procedures and documentation - Do procedures and documentation accurately describe the application's behavior and function?

10. Audit trails - Can transactions and functions be traced to a specific time and user?

11. Recovery - Can processing be recovered from an error state?

12. Ease of Use - Can someone in the intended user audience use the application without extensive training or additional skills? Can the functions in the application be performed easily?

13. Error Handling - Are errors (both expected and unexpected) handled correctly?

14. Search - Can data be queried and found in the application?
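As an illustration of the first source in the list, field and data edits, here is a minimal sketch of a table of boundary and invalid-value checks. The field (a payment amount), its allowed range, and the `is_valid_amount` function are all hypothetical; the function simply stands in for whatever edit the application under test performs.

```python
from decimal import Decimal, InvalidOperation

# Assumed limits for a hypothetical "payment amount" field.
MIN_AMOUNT = Decimal("0.01")
MAX_AMOUNT = Decimal("10000.00")


def is_valid_amount(raw: str) -> bool:
    """Hypothetical field edit: accept a decimal amount within the range."""
    try:
        amount = Decimal(raw.strip())
    except InvalidOperation:
        return False
    if not amount.is_finite():  # rejects NaN and Infinity
        return False
    return MIN_AMOUNT <= amount <= MAX_AMOUNT


# Each case pairs an input with the result we expect from the edit.
cases = [
    ("0.01", True),       # lower boundary
    ("0.00", False),      # just below lower boundary
    ("10000.00", True),   # upper boundary
    ("10000.01", False),  # just above upper boundary
    (" 25.50 ", True),    # surrounding whitespace
    ("-5", False),        # negative value
    ("", False),          # empty field
    ("abc", False),       # non-numeric text
    ("NaN", False),       # special value that parses as a decimal
]

failures = [(raw, expected) for raw, expected in cases
            if is_valid_amount(raw) != expected]
print("failures:", failures)
```

Notice that none of these cases came from a requirements document. They come from asking what a field of this type can receive at, just inside, and just outside its limits.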

Generic Functionality

This is functionality that is contained in most applications, such as adding, updating, deleting and querying data. The catch is that these functions are performed differently from one application to the next.
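Because the pattern is the same even when the mechanics differ, a generic add, query, update and delete round trip can be written once and adapted to each application. The sketch below assumes a hypothetical `InMemoryStore` class as a stand-in for the application under test; in practice you would replace it with calls through the application's own screens or interfaces.

```python
class InMemoryStore:
    """Hypothetical stand-in for the application under test."""

    def __init__(self):
        self._rows = {}
        self._next_id = 1

    def add(self, record: dict) -> int:
        record_id = self._next_id
        self._rows[record_id] = dict(record)
        self._next_id += 1
        return record_id

    def get(self, record_id: int):
        row = self._rows.get(record_id)
        return dict(row) if row is not None else None

    def update(self, record_id: int, changes: dict) -> bool:
        if record_id not in self._rows:
            return False
        self._rows[record_id].update(changes)
        return True

    def delete(self, record_id: int) -> bool:
        return self._rows.pop(record_id, None) is not None


def crud_round_trip(store) -> dict:
    """Exercise add, query, update and delete, recording each check."""
    results = {}
    record_id = store.add({"name": "Pat", "balance": 100})
    results["added"] = store.get(record_id) == {"name": "Pat", "balance": 100}
    results["updated"] = (store.update(record_id, {"balance": 75})
                          and store.get(record_id)["balance"] == 75)
    results["deleted"] = (store.delete(record_id)
                          and store.get(record_id) is None)
    # Deleting the same record twice should be rejected.
    results["delete_missing_rejected"] = store.delete(record_id) is False
    return results


results = crud_round_trip(InMemoryStore())
print(results)
```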

Exploratory Testing

In the absence of any pre-defined knowledge of system or software behavior, exploratory testing can be a way to both learn and to test. You may find behavior that is obviously incorrect and you may also observe behavior that leads to further research to determine correctness. Although I think exploratory testing is a helpful method, my main concern is how to know if I'm seeing a bug or a "feature." Be prepared to spend significant time in research or reporting defects that are not really issues.

What is Correct Behavior?

We have looked at ways to define the test, but how do we know if the observed results are correct? In a requirements-based approach, the answer would be to look at the requirements. However, we need to consider other sources for our oracle.

Here are a few sources of expected application behavior:

An Independent Processing Source - Let's say you are testing a computation in a new system that is replacing an older system. To check the result you could perform the same computation on the older system and compare the results. Or, you could create another source, such as a spreadsheet to have an independent source of comparison. Your approach would depend on what is available and what it would take to create something new.

Users - In some cases, you can ask a user to assess the correctness of your results. This could even be a part of acceptance testing or sign off. Of course, this depends on the knowledge, willingness and availability of users.

Other Subject Matter Experts - Your oracle may be a person who is not a user, but understands the processing rules enough to evaluate the results.

System Documentation - The quality (accuracy, detail and clarity) of the documentation is the key factor for its use in evaluating test results. This documentation can include user guides as well as technical design documents.

Training Materials - These may be detailed enough for test evaluation. At the very least, you should be able to verify that the documentation is accurate.

Before totally dismissing the above methods in favor of requirements, consider that even good requirements can have errors. So these methods can be another source of expected results even when requirements are defined.
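The independent processing source described above can be sketched as a side-by-side comparison. Everything specific here is an assumption made for illustration: the penalty rule (1.5% of the balance with a 5.00 minimum), the two function names, and the test inputs. `new_system_penalty` stands in for the system under test, and `oracle_penalty` stands in for the older system or a spreadsheet formula written separately.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def new_system_penalty(balance: Decimal, days_late: int) -> Decimal:
    """Stand-in for the system under test (hypothetical rule)."""
    if days_late <= 0:
        return Decimal("0.00")
    percent = (balance * Decimal("0.015")).quantize(CENT, rounding=ROUND_HALF_UP)
    return max(Decimal("5.00"), percent)


def oracle_penalty(balance: Decimal, days_late: int) -> Decimal:
    """Independent calculation, written separately from the code above."""
    if days_late < 1:
        return Decimal("0.00")
    percent = (balance * 15 / 1000).quantize(CENT, rounding=ROUND_HALF_UP)
    return percent if percent > Decimal("5.00") else Decimal("5.00")


def compare(cases):
    """Return every input where the two sources disagree."""
    mismatches = []
    for balance, days_late in cases:
        actual = new_system_penalty(balance, days_late)
        expected = oracle_penalty(balance, days_late)
        if actual != expected:
            mismatches.append((balance, days_late, actual, expected))
    return mismatches


# Hypothetical inputs, including a value near the minimum-penalty boundary.
cases = [
    (Decimal("100.00"), 0),
    (Decimal("100.00"), 3),
    (Decimal("333.33"), 1),
    (Decimal("1000.00"), 10),
    (Decimal("12345.67"), 30),
]

mismatches = compare(cases)
print("mismatches:", mismatches)
```

A mismatch tells you only that the two sources disagree, not which one is wrong. Each difference still needs someone who knows the processing rules to decide.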

Summary

For many years people have understood that in dealing with software and systems there may well be a difference between how a system is described on paper and how it is actually intended to behave. This difference is addressed in two views of test and evaluation: verification and validation.

Verification is based on project deliverables and evaluates something to determine if it has been built correctly according to specifications or the "paper world." This is primarily the developer perspective. Validation is based on evaluating something based on user need or simulated real world conditions. The intent is to determine fitness for use from the customer or user perspective. Without both perspectives, you are at risk of building or acquiring something that meets specifications, but is unfit for use.

Requirements-based testing is verification. It is a solid and valuable technique if you have clear testable requirements. The techniques discussed in this article are ways to test from the real-world usage perspective and can be considered validation.

Unfortunately, testers have been taught for so many years that the only way to test is from requirements that many have forgotten, or never learned, other methods of testing. I sometimes refer to this situation as "The Lost Art of Validation."

Remember that there are several phases and many types of testing. Requirements can define what should be tested and what defines correct behavior for some of these test phases and types, but not all of them. Requirements are best applied at the system phase of testing. The danger of basing user acceptance testing on requirements is that they may not accurately or completely define what is needed or desired.

Hopefully, this article has helped expand or reinforce your views of validation. In addition, I hope it gives you some new techniques to use in your test design and evaluation.